Recipe Reader Skill

An Alexa skill which helps novice cooks navigate recipes by voice.

| Aspect | Details |
| --- | --- |
| Space | Food, Productivity |
| Roles | Conversation (VUI) Design, User Testing |
| Challenge | Understanding how to guide users through the recipe-selection process. |
| Finding | Users preferred to ask for the ingredients list as needed, rather than have it told at predetermined points in the conversation. |
| Methods | Personas, User Stories, Sample Dialogs, User Flows, Scripts |

TABLE OF CONTENTS

User Persona

System Persona

Placeona

INFORMATION ARCHITECTURE AND USER FLOWS

Introduction

This case study presents a voice user interface (VUI) project I developed over five weeks for the voice interface class at Career Foundry. A demo of the skill is shown in the video below.

A demo of the skill.

The aim of this recipe reader project was to design a skill for Alexa that allows the user to navigate and interact with a recipe primarily using voice commands. The skill was ultimately certified and published by Amazon as QuikBites (which you can enable and try yourself, here).

The brief for the project was as follows:

Allow users to select easy-to-make dishes from several options and to follow step-by-step directions to prepare the meal.

The skill should have the following features:

- Breakfast, lunch, dinner, and snack recipe buckets

- The ability to ask for a different recipe if the user doesn't like a suggestion

- A strategy for dealing with users who miss a preparation step or need something repeated

- A way to check whether your user is ready to move on to the next step

What follows is a step-by-step account of how I developed the project and the thinking that went into each of the steps.

PERSONAS

A persona card for "Liz" - a synthesis of preliminary user interviews I conducted as part of research.

To learn more about how potential users of a recipe reader skill approach cooking, I conducted three short interview sessions with people who said they might find a recipe reader skill useful.

Based on these interviews, I developed a set of personas which oriented the remainder of the design process.

User Persona

Pictured above is a persona card for Liz, a synthesis of the interviews.

Personas are a common (if often misunderstood or misapplied) tool in the development of products.

In a more typical, screen-based UX design project, personas would be developed to better understand the background and motivations of different types of users that might engage with the product.

This still holds when designing for voice-led interactions, but here the persona also conveys something about the context(s) of use. This might include the environment where a user is likely to interact with the product or any specific situations in which a user might engage the skill.

Three key observations worth noting about the Liz persona:

- Two of the people I interviewed related to cooking enthusiastically but casually. None of them was a culinary professional.

- While all three interviewees were experienced digital technology users, two of them saw cooking as a low-tech activity and an opportunity to unplug. One of the interviewees regularly used her phone to reference recipes, but found the potential of performing this task hands-free, via voice, appealing.

- The persona card includes a section titled Speaking Style. This is an important feature of a voice user persona because users are more comfortable communicating with those who are similar to them. Having a clear description of how users communicate with their voices will be helpful when it comes time to develop the speaking style of the System Persona.

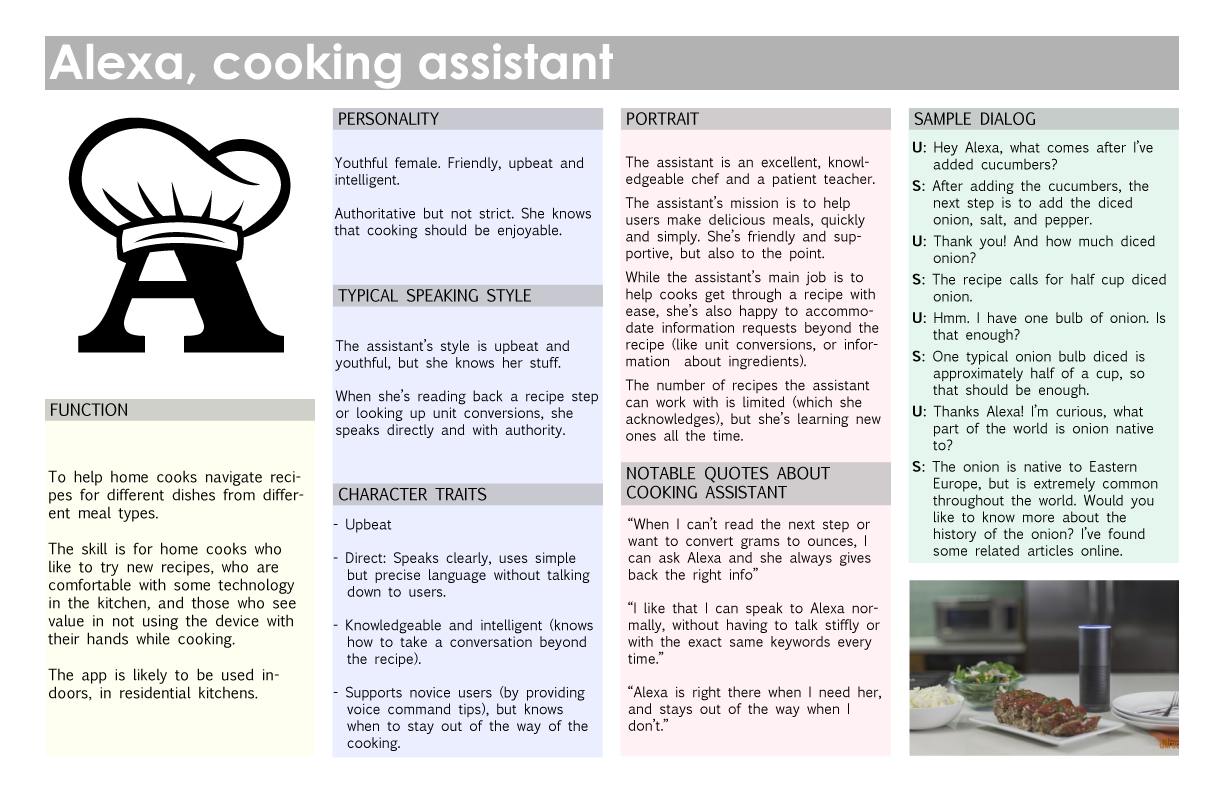

System Persona card for the recipe reader skill.

In developing this system persona, I made sure to outline a personality that potential users would be able to relate to.

Studies suggest that when users interact with interfaces that contain elements of personification, they will relate better and more comfortably to the interface if its manner resembles their own.

It is for this reason, to give the system a personality which can appeal to users, that describing a system persona in some detail is important.

Placeona

In addition to developing user and system personas, it can also be useful to identify placeonas: descriptions of the situation around use that emphasize place and the kinds of embodied user actions that environment can support.

The placeona concept was originally introduced by Bill Buxton.

In its simplest terms, the placeona answers the following two-part question:

A formula to generate placeonas

The two parts of this question can be referenced as the action context and a typical location, respectively.

Based on this activity in a place, the placeona then catalogs the availability of the user's hands, eyes, voice, and ears, our primary ways of interacting with interfaces. For example:

The "Biking in the city" placeona is: Hands: busy; Eyes: busy; Ears: free; Voice: free.

With the situations around use described in this placeona format, designers have a reference point for how users are able or unable to interact with their product in likely scenarios. For VUIs specifically, developing some placeonas around the product's core functionality should reveal whether voice is always a viable mode of interaction with the service.

For the recipe reader, I worked with the following placeona:

Placeona:

Making dinner at home in the kitchen.

Hands: busy

Eyes: mostly busy

Ears: free

Voice: free

With voice and hearing available, and vision occasionally available, this action context appeared to be a great fit for a voice interface.
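The placeona format lends itself to a small data structure. The following is a minimal sketch, not part of the original project: the `Placeona` class, the `Availability` enum, and the `voice_is_viable` rule (voice and ears must both be free) are assumptions made for illustration. The two instances use the values given above.

```python
from dataclasses import dataclass
from enum import Enum


class Availability(Enum):
    FREE = "free"
    MOSTLY_BUSY = "mostly busy"
    BUSY = "busy"


@dataclass(frozen=True)
class Placeona:
    action: str    # the action context, e.g. "Making dinner"
    location: str  # a typical location, e.g. "at home in the kitchen"
    hands: Availability
    eyes: Availability
    ears: Availability
    voice: Availability

    def voice_is_viable(self) -> bool:
        # Assumed rule: a voice interface needs the user to be able
        # to both speak and listen.
        return (self.voice is Availability.FREE
                and self.ears is Availability.FREE)


cooking = Placeona(
    action="Making dinner",
    location="at home in the kitchen",
    hands=Availability.BUSY,
    eyes=Availability.MOSTLY_BUSY,
    ears=Availability.FREE,
    voice=Availability.FREE,
)

biking = Placeona(
    action="Biking",
    location="in the city",
    hands=Availability.BUSY,
    eyes=Availability.BUSY,
    ears=Availability.FREE,
    voice=Availability.FREE,
)

print(cooking.voice_is_viable())
```

Writing placeonas down this way makes it easy to check a whole set of likely scenarios against the same viability rule.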

USER STORIES

Following these preliminary definitions of personas, I developed a collection of user stories: short statements which, based on background research, sketch a situation where a successful interaction between a user and the product takes place. User stories are typically presented in the first person. They characterize the user in some relevant way, present that user's specific need, and propose a product feature that might resolve that need.

These are the user stories which I produced for this project:

- As an inexperienced cook, I want to find simple recipes, so that I can quickly prepare delicious meals.

- As someone with little time, I want to choose recipes from different meal types, to make browsing more efficient.

- As a choosy eater, I want lots of quality recipes, so that I don't get bored.

- As an inexperienced cook, I want to tell the skill when to move on, so that I can move through the recipe at my own pace.

- As a novice cook, I want to know which ingredients are needed for each recipe...

... so that I can imagine what a recipe might taste like.

... so that I can decide whether I want to make it.

- When I am cooking, I want hands-free navigation of the recipe, so that I can stay focused on the cooking task.

The user stories exercise is useful in that it pairs realistic user needs with potential product features, thus making sure that the product stays user-centered.

SAMPLE DIALOGS

User stories segue nicely into the sample dialogs method. A sample dialog is an imagined scripted conversation between the user and the system, which elaborates on the scenario presented in the user story. Put differently, sample dialogs are speculations on what a user might want to say and hear in response during an exchange with a system.

Sample dialogs are an important step in this design process because it is here that the tone and manner of the interactions really begin to take shape.

Two sample dialogs are presented below:

System: Here are some breakfast options: toast, cereal, oatmeal. Do you want to make any of these?

U: Yes. Let's make oatmeal.

S: [alt1] Got it. For oatmeal you will need...

S: [alt2] Ok! Here are the ingredients needed for oatmeal.

S: [alt3] Great. Before we get started, make sure you have the following ingredients.

S: [alt 2] You can make breakfast, lunch, or dinner. Which do you want to make?

U: [instead of meal offers a dish name] I want to make tomato soup.

S: [recognizes and adjusts] Got it. For tomato soup you will need...

Note how these sample dialogs make the presumption that the list of ingredients should follow after the user selects the recipe. This seemed natural to me at the time, but actually proved problematic in user testing. More on this later.

A recording of a reading of another sample dialog. From this reading it became apparent that the way ingredient quantities are presented is unclear.

An effort should be made to write the exchanges between system and user as naturally as possible. An excellent way of checking how natural the conversations sound is to act them out with another person. Observations from these early tests should be used to refine the dialogs.

Another recording of a reading of a sample dialog. Note again how the system presumes that the user wants to hear the ingredients right after picking a recipe. User testing proved this presumption to be inaccurate.

The value sample dialogs bring to the VUI design process is high. From giving stakeholders and design collaborators a clear sense of how the interface will feel and sound, to generating a plausible set of specific user requests and viable system responses, sample dialogs can be considered the first prototypes of VUIs.

INFORMATION ARCHITECTURE AND USER FLOWS

The information architecture of the recipes.

After the sample dialogs are sufficiently developed and honed, the next step is to describe them in logical terms, which will in turn help build the actual interaction model.

Here, I first found it helpful to develop the information architecture of how different recipes and the relevant pieces of information about them would be related (see diagram above).

With a sense of how the recipe information might be organized, I moved on to breaking down the sample dialogs into logical conversational components and flow charts.
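As a rough illustration of what such an information architecture might look like in code, the sketch below groups recipes into meal-type buckets, with each recipe carrying its ingredients, quantities, and steps. The class names, fields, and sample data (including the quantities and the assignment of tomato soup to lunch) are hypothetical and are not taken from the diagram.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Ingredient:
    name: str
    quantity: str  # spoken form, e.g. "one cup" (hypothetical values below)


@dataclass
class Recipe:
    name: str
    meal_type: str  # "breakfast", "lunch", "dinner", or "snack"
    ingredients: List[Ingredient] = field(default_factory=list)
    steps: List[str] = field(default_factory=list)


def by_meal_type(recipes: List[Recipe]) -> Dict[str, List[str]]:
    """Group recipe names into meal-type buckets, preserving list order."""
    buckets: Dict[str, List[str]] = {}
    for recipe in recipes:
        buckets.setdefault(recipe.meal_type, []).append(recipe.name)
    return buckets


# Hypothetical sample data for illustration only.
RECIPES = [
    Recipe("oatmeal", "breakfast",
           [Ingredient("rolled oats", "one cup"), Ingredient("water", "two cups")],
           ["Boil the water.", "Stir in the oats.", "Simmer until thick."]),
    Recipe("toast", "breakfast",
           [Ingredient("bread", "two slices")],
           ["Toast the bread."]),
    Recipe("tomato soup", "lunch",
           [Ingredient("canned tomatoes", "one can")],
           ["Heat the tomatoes.", "Blend until smooth."]),
]

print(by_meal_type(RECIPES))
```

Keeping quantities as a separate field from ingredient names is one way to support both a full ingredients list and a question about a single ingredient's quantity.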

Since I am working on the Alexa platform, one such unit is the "Intent": a distinct functionality or task the user can accomplish.

Based on the sample dialogs, the following intents capture all the initial core functionalities:

- Pick Recipe Intent

- Recipe Prep Intent

- Ingredients List Check Intent

- Ingredient Quantity Check Intent

- Favorites Save Intent

- Favorites Retrieve Intent
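To make the idea of an intent concrete, here is a minimal sketch that pairs each of the intents above with a few sample utterances and resolves a spoken phrase to an intent. The utterances are hypothetical, and the exact-match lookup is only a stand-in for the natural language understanding that Alexa performs; this is not the format of an actual Alexa interaction model.

```python
from typing import Optional

# Hypothetical sample utterances for each intent listed above.
INTERACTION_MODEL = {
    "PickRecipeIntent": ["let's make oatmeal", "i want to make tomato soup"],
    "RecipePrepIntent": ["next", "next step", "repeat that"],
    "IngredientsListCheckIntent": ["what are the ingredients", "what do i need"],
    "IngredientQuantityCheckIntent": ["how much water do i need"],
    "FavoritesSaveIntent": ["save this recipe to my favorites"],
    "FavoritesRetrieveIntent": ["what are my favorites"],
}


def resolve_intent(utterance: str) -> Optional[str]:
    """Return the intent whose sample utterances contain the phrase, if any."""
    normalized = utterance.lower().strip(" .!?")
    for intent, samples in INTERACTION_MODEL.items():
        if normalized in samples:
            return intent
    return None


print(resolve_intent("Next step."))
```

Each intent groups many ways of saying the same thing, which is why the sample dialogs are a useful source of utterances.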

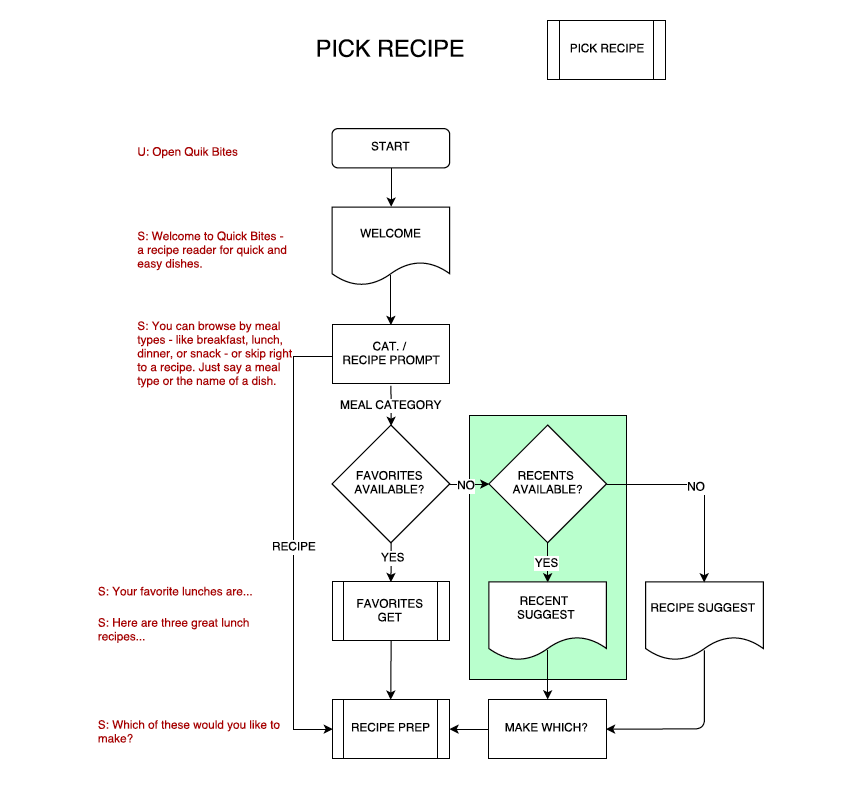

A flow-chart of the pickRecipeIntent. The section highlighted in green shows a modification which makes the skill more contextually responsive.

A flow-chart which documents the ingredientListIntent. Note how this intent is designed to be triggered at any point in the experience, either during recipe browsing, or during recipe prep.

A screenshot of a script section showing the "Welcome" response that is part of the Pick Recipe Intent.

Having worked out the user flows, the next step is developing scripts. In the context of VUIs, the script is a different type of document than conventionally understood. Here, a script is an organized database of system prompts and responses, along with sample user utterances. At the highest level, the script document is organized by intents, or states, each of which is represented as a separate tab in a spreadsheet.

Shown in the script screenshot above is the "Welcome" response that's triggered by specific user utterances. Each intent section might document several prompts and responses in this way. Note the tapered version of the response, highlighted in green. If the system is able to recognize context, such as how frequently a specific user has used the skill, a variation on a response can be produced to keep interactions feeling fresh and novel.
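A tapered response of this kind can be sketched as a simple choice based on how often the user has visited. In the sketch below, the `welcome_prompt` function, the `taper_after` threshold, and most of the prompt wording are hypothetical; only the meal-type question is borrowed from the sample dialogs.

```python
# Hypothetical prompt wording; the actual script is in the linked document.
WELCOME_FULL = (
    "Welcome to the recipe reader. "
    "You can make breakfast, lunch, or dinner. Which do you want to make?"
)
WELCOME_TAPERED = "Welcome back. Breakfast, lunch, or dinner?"


def welcome_prompt(previous_sessions: int, taper_after: int = 3) -> str:
    """Pick the shorter welcome once the user has visited often enough."""
    if previous_sessions >= taper_after:
        return WELCOME_TAPERED
    return WELCOME_FULL


print(welcome_prompt(previous_sessions=0))
print(welcome_prompt(previous_sessions=5))
```

New users get the full orientation, while returning users skip straight to the choice.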

To see the full script document, see here.

USER TESTING

A test of the skill in the Alexa Skills Kit environment.

With the scripts finalized, it was time to produce the next level of prototype and to test the system. While I could test the functionality of the interaction model with some developer tools (see above), the most important test would come from actual users who were not involved in the development of the skill.

Specifically, I was interested in the following research questions:

- Can users effectively select a recipe from the available options, with navigation set up by meal type?

- Can users advance through the recipe with verbal commands?

- Do users have all the necessary information when they are in the instructions state?

Methodology

While the ideal testing setup would task users with actually preparing a dish with the help of the skill, for the sake of cost and efficiency users were instead presented with this scenario hypothetically, and asked to make their way through the experience as if they were preparing a meal.

The test was conducted with the help of the user-sourcing platform UserBob. This platform is a front-end interface for UX professionals which leverages the reach of Mechanical Turk.

To see the full Test Report, click here.

The back-end dashboard of the UserBob testing service.

Findings

In general, the tests were successful in that users were able to effectively navigate to a recipe and go through the instructions flow without problems.

Some unexpected issues did emerge, however. These issues generally fell into one of six groups: Barge-In, Flow, Grammar, Navigation, Content, or Unimplemented Feature. Three of these groups are described below.

1. Barge-In

Due to the way certain prompts were phrased, users began to give their response before the prompt finished playing. Since Alexa does not support barge-in, users' responses were not received by the system, and this led to some minor frustrations. In general users were able to recover from this with ease.

Due to the way certain prompts were phrased, users would "barge-in," giving their response before the prompt finished playing.

To fix this, I rewrote the problematic prompts in such a way as to avoid barge-in. While giving users instructions, instead of following each intent option with the utterance to trigger that intent, I gave users all intent options together, and then gave them the utterances to trigger those intents.
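The restructuring described above can be sketched as two ways of assembling the same prompt. The option names, trigger phrases, and wording below are hypothetical and are not the skill's actual prompts; the sketch only shows the difference between interleaving each option with its utterance and grouping the options before the utterances.

```python
from typing import List, Tuple

# Hypothetical (option, trigger phrase) pairs for illustration only.
OPTIONS: List[Tuple[str, str]] = [
    ("hear the ingredients", "ingredients"),
    ("start cooking", "start"),
    ("pick another recipe", "something else"),
]


def join_list(items: List[str], conjunction: str = "or") -> str:
    """Join items as natural speech: 'a', 'a or b', or 'a, b, or c'."""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} {conjunction} {items[1]}"
    return ", ".join(items[:-1]) + f", {conjunction} {items[-1]}"


def interleaved_prompt(options: List[Tuple[str, str]]) -> str:
    # Each option is followed at once by its trigger phrase, which invites
    # the user to answer before the remaining options have played.
    return " ".join(f"To {action}, say '{phrase}'." for action, phrase in options)


def grouped_prompt(options: List[Tuple[str, str]]) -> str:
    # All options come first and the trigger phrases come last, so the
    # cue to respond arrives only at the end of the prompt.
    actions = [action for action, _ in options]
    phrases = [f"'{phrase}'" for _, phrase in options]
    return f"You can {join_list(actions)}. Just say {join_list(phrases)}."


print(interleaved_prompt(OPTIONS))
print(grouped_prompt(OPTIONS))
```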

Instead of:

The prompt is adjusted to:

2. Flow

At two points in the experience, it was evident that users were confused by where they were in the flow or why certain information was being presented to them.

One such case is the presentation of the dish's ingredients in the first step of the prep instructions, after the user has already picked the dish. This was initially implemented with the presumption that users would commit to a recipe and then would need to know how much of what ingredient is needed as part of the recipe prep process.

However, in testing it was apparent that users often asked for the ingredients before committing to making a dish. As a result, users would hear the ingredients, decide on a dish, and then hear the ingredients again as part of the first preparation step. This caused redundancy and confusion.

Because of a false presumption about when users would want to hear the full ingredients list, the initial interaction model would give the user an almost identical ingredients list twice, back to back - leading to confusion and loss of trust in the system.

This issue is addressed more closely below, as an unimplemented feature.

An additional issue in the flow category is the unclear completion of the skill experience. After the instructions end, the system appends a brief CLOSING_MESSAGE to the last instructions step. This wasn't always clear to users, who, in several cases, expected further instruction, as evidenced by their saying "next" after the final step.

Some users found the skill's closing message ambiguous.

To fix this, I wrote a new, more distinct closing message, which can be heard in the video demo at the top of the page.

3. Unimplemented Feature: Ingredients Request at Any Point

A user who reported that QuikBites would be useful to her pointed out an unimplemented feature which had in fact been called for in the initial designs: the ability to reference the ingredients list not just prior to selecting a dish to make, but also within the instructions flow. Implementing this option would also solve the problem faced by users who did not ask for ingredients prior to the start of the instructions, as described above in section 2, Flow.

To resolve this, I removed the listing of ingredients from the first instructions step. I then added the option to trigger the ingredientsIntent at any point during the session, whether within the instructionsIntent or prior to it. This let users decide when, and whether, to hear the ingredients.
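The behavior described here can be sketched as a small session object in which the ingredients request is handled in every state and leaves the user's place in the recipe untouched. This is an illustrative sketch rather than the skill's actual code: `RecipeSession`, the state names, `nextIntent`, `repeatIntent`, the response wording, and the recipe data are all hypothetical, while `ingredientsIntent` and `instructionsIntent` follow the names used above.

```python
# Hypothetical recipe data for illustration only.
RECIPE = {
    "name": "oatmeal",
    "ingredients": ["oats", "water", "salt"],
    "steps": ["Boil the water.", "Stir in the oats and salt.", "Simmer until thick."],
}


class RecipeSession:
    def __init__(self, recipe):
        self.recipe = recipe
        self.state = "browsing"  # becomes "instructions" once prep starts
        self.step = 0

    def handle(self, intent):
        steps = self.recipe["steps"]
        if intent == "ingredientsIntent":
            # Available in any state; neither the state nor the step changes.
            items = ", ".join(self.recipe["ingredients"])
            return f"For {self.recipe['name']} you will need {items}."
        if intent == "instructionsIntent" and self.state == "browsing":
            # The first step no longer reads out the ingredients list.
            self.state = "instructions"
            self.step = 0
            return steps[0]
        if self.state == "instructions" and intent == "nextIntent":
            if self.step + 1 < len(steps):
                self.step += 1
                return steps[self.step]
            return "That was the last step."
        if self.state == "instructions" and intent == "repeatIntent":
            return steps[self.step]
        return "Sorry, I can't do that right now."


session = RecipeSession(RECIPE)
print(session.handle("ingredientsIntent"))   # before committing to the dish
print(session.handle("instructionsIntent"))  # first step, no ingredients list
print(session.handle("ingredientsIntent"))   # mid-recipe, place is kept
print(session.handle("nextIntent"))
```

Because the ingredients branch is checked before any state-specific branch, users hear the list only when they ask for it.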

Other issues

Other issues were much less common among users and had a lower impact on the overall experience of the skill. They are listed in the full User Test Report.

User Testing Summary

In summary, this round of testing was successful in that it showed the skill to be effectively navigable and coherent. Additionally, it revealed several issues with flow, usability, and content which deserved immediate attention.

The QuikBites skill in the Alexa Skill Store.

With this round of research complete, I made the appropriate updates and published the skill on Amazon's skill store. Still, with only one round of initial testing, follow-up tests remain outstanding. Refining the interaction model through additional, repeated testing would be among the most immediate next steps.

Beyond that, the skill's functionality has much room for expansion. Primarily, the skill could benefit greatly from the regular addition of new recipes. Even better would be giving users the option to direct the skill to recipes found online. Other high-utility features might include visual, multimodal elements like images of dishes and text versions of the steps and ingredients. These would be useful on Alexa-enabled devices with screens.

Additionally, the favorites feature could be added, especially since the logic and scripts for it are already developed.

Lastly, there are still some dialog management issues which need attention. For instance, if in the course of a session a user rejects a recipe, that recipe remains unavailable in subsequent sessions. This behavior is unintended and needs to be addressed in the code.
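The cause of this behavior is not documented here. One plausible fix, assuming rejections are tracked in a collection that outlives the session, is to clear that collection whenever a new session starts. The sketch below illustrates the idea with a hypothetical `RecipePicker` class; it is not the skill's actual code.

```python
class RecipePicker:
    def __init__(self, recipes):
        self.recipes = list(recipes)
        self.rejected = set()

    def start_session(self):
        # Clear rejections so an earlier "no" does not carry over
        # into a later session.
        self.rejected.clear()

    def suggest(self):
        """Return the first recipe not rejected in this session, or None."""
        for recipe in self.recipes:
            if recipe not in self.rejected:
                return recipe
        return None

    def reject(self, recipe):
        self.rejected.add(recipe)


picker = RecipePicker(["toast", "cereal", "oatmeal"])
picker.start_session()
picker.reject(picker.suggest())  # the user turns down "toast"
print(picker.suggest())          # a different recipe is offered

picker.start_session()           # a later session begins
print(picker.suggest())          # "toast" is available again
```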

In summary, I consider this project a success. In working through the problems typical of a VUI design process, I further developed my skills as a UX designer, and added new ones which are specific to voice. Special thanks to my course mentor, Shyamala Prayaga.
